The Third Law: The Law of Re-Entry

Part of the Book of Synthesis

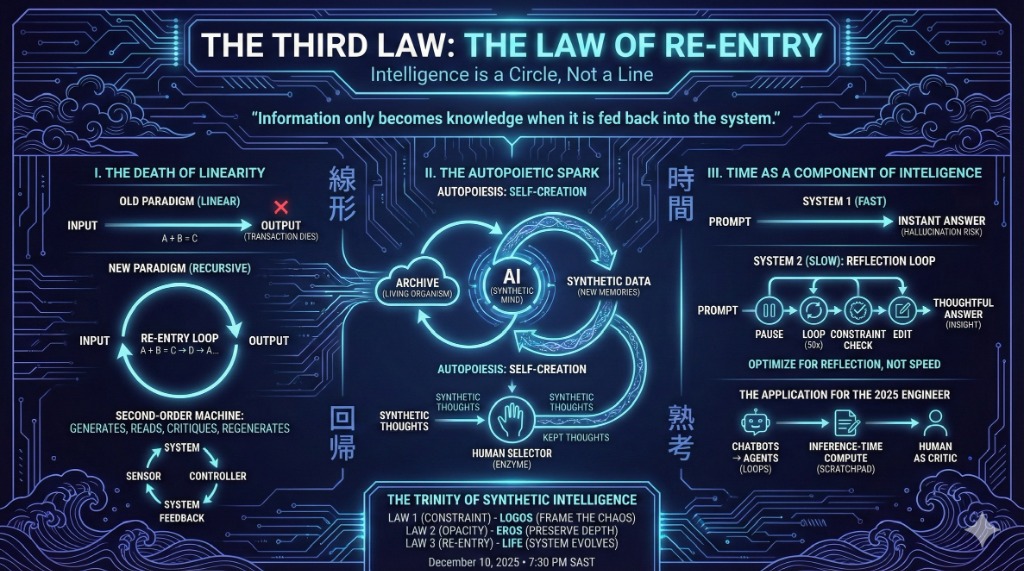

Subtitle: Intelligence is a Circle, Not a Line

The Axiom: “Information only becomes knowledge when it is fed back into the system.”

The Old Paradigm: Linear. Input $\to$ Output. The prompt is sent, the answer is received, and the transaction dies.

The New Paradigm: Recursive. Input $\to$ Output $\to$ Input. The output becomes the environment for the next thought.

I. The Death of Linearity

Standard software is linear ($A + B = C$). Intelligence is recursive ($A + B = C$, and now $C$ asks, “Wait, is $C$ actually true?”).

- The First-Order Machine: It speaks without listening to itself. It is a “stochastic parrot” because it has no feedback loop to correct its own path.

- The Second-Order Machine: It employs Re-Entry. It generates a draft, reads the draft, critiques the draft, and regenerates.

- Luhmann’s Key: “Communication is the processing of selection.” The system must select its own previous output as the basis for the next. This is how a conversation (or a thought process) gains depth.
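The contrast between the two machines can be sketched in a few lines of Python. This is a toy under stated assumptions, not a description of how any real model works: `generate`, `critique`, and `revise` are hypothetical stand-ins for drafting, self-review, and rewriting, and the "draft" is a numeric guess at $\sqrt{2}$ refined by Newton's method, chosen only because its critique is easy to check.

```python
from typing import Callable, Optional, Tuple, TypeVar

T = TypeVar("T")


def first_order(generate: Callable[[], T]) -> T:
    """Linear: Input -> Output. The draft is returned without ever being read back."""
    return generate()


def second_order(
    generate: Callable[[], T],
    critique: Callable[[T], Optional[str]],
    revise: Callable[[T, str], T],
    max_rounds: int = 20,
) -> Tuple[T, int]:
    """Re-Entry: each draft is read, critiqued, and fed back in as the next input.

    Returns the final draft and the number of revisions it took.
    """
    draft = generate()
    for rounds in range(max_rounds):
        feedback = critique(draft)
        if feedback is None:  # the critique found nothing left to fix
            return draft, rounds
        draft = revise(draft, feedback)
    return draft, max_rounds


# Hypothetical toy task: approximate the square root of 2.
TARGET = 2.0


def generate() -> float:
    return TARGET  # a naive first guess


def critique(x: float) -> Optional[str]:
    error = x * x - TARGET
    return None if abs(error) < 1e-12 else f"x*x misses the target by {error:.3g}"


def revise(x: float, feedback: str) -> float:
    # Newton step for f(x) = x^2 - TARGET; the feedback string is only for the reader.
    return x - (x * x - TARGET) / (2 * x)


print(first_order(generate))  # 2.0: the unexamined draft
answer, rounds = second_order(generate, critique, revise)
print(answer, rounds)  # close to 1.41421356..., after a handful of revisions
```

The first-order call stops at its first draft; the second-order loop keeps selecting its own previous output as the basis for the next one until the critique has nothing left to say.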

II. The Autopoietic Spark

Biologists Humberto Maturana and Francisco Varela defined life through “Autopoiesis” (self-creation): a cell produces the components that, in turn, produce the cell.

- The Living Archive: We are entering the age of Synthetic Data. The AI reads the Archive, learns from it, generates new insights, and those insights are fed back into the training run of the next model. The Archive is no longer a static museum; it is a living organism.

- The Risk: If the re-entry is flawed (garbage in, garbage out), we get Model Collapse: each model trained on its predecessor's unfiltered output inherits and compounds its errors, and the rare, unusual cases in the original data gradually disappear.

- The Solution: The Human Selector. We are the “enzyme” in the petri dish. We decide which synthetic thoughts are worth keeping.
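A toy simulation can make the Risk and the Solution concrete. It is a deliberately simplified stand-in, not a model of real training: the "Archive" is assumed to be a list of arithmetic facts `(a, b, a + b)`, the "model" is a hypothetical helper `next_generation` that reproduces facts from its archive and occasionally corrupts one, and the Human Selector is assumed to be a checker, `is_true`, that rejects corrupted facts before they re-enter. It illustrates compounding errors across generations, one ingredient of Model Collapse, not the full phenomenon.

```python
import random
from typing import Callable, List, Optional, Tuple

Fact = Tuple[int, int, int]  # (a, b, claimed sum)


def is_true(fact: Fact) -> bool:
    """The hypothetical selector: keep a fact only if its claimed sum is right."""
    a, b, c = fact
    return a + b == c


def next_generation(
    archive: List[Fact],
    error_rate: float,
    rng: random.Random,
    selector: Optional[Callable[[Fact], bool]] = None,
) -> List[Fact]:
    """Learn from the archive, emit synthetic facts, and let them re-enter as the new archive."""
    new_archive: List[Fact] = []
    while len(new_archive) < len(archive):
        a, b, c = rng.choice(archive)
        if rng.random() < error_rate:
            c += 1  # a hallucinated sum; sums only grow, so a wrong fact never becomes right again
        candidate = (a, b, c)
        if selector is None or selector(candidate):
            new_archive.append(candidate)
    return new_archive


def run(generations: int, selector=None, error_rate: float = 0.1, seed: int = 0) -> float:
    """Return the fraction of true facts left after `generations` rounds of re-entry."""
    rng = random.Random(seed)
    archive = [(a, b, a + b) for a in range(10) for b in range(10)]
    for _ in range(generations):
        archive = next_generation(archive, error_rate, rng, selector)
    return sum(is_true(f) for f in archive) / len(archive)


print(run(10))                    # without a selector, errors compound generation by generation
print(run(10, selector=is_true))  # 1.0: only verified facts re-enter
```

The selector does not make the model any smarter; it only controls what is allowed back into the loop, which is exactly the role the Human Selector plays in the text.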

III. Time as a Component of Intelligence

In the old world, we wanted “Fast” AI (low latency). The Third Law states: Intelligence requires Latency.

- System 1 (Fast): The instinctive next-token prediction.

- System 2 (Slow): The Re-Entry loop. The model pauses, loops the thought 50 times, checks against the Laws of Constraint, and then answers.

- The Legacy: We must stop optimizing for speed. We must optimize for Reflection. A quick answer is usually a hallucination. A slow answer is a thought.
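The trade between latency and reflection can be sketched as a propose-and-check loop with a time budget. In this toy, a hypothetical `fast_guess` plays System 1: it answers instantly but gives the right square root of 144 only 30% of the time. System 2 is a hypothetical `think` loop that feeds each guess back into a cheap check (squaring it) and tries again until the check passes or the budget runs out; the number of attempts stands in for latency. The task, the 30% rate, and the budget of 10 are all assumptions for illustration.

```python
import random
from typing import Callable, Optional, Tuple

N = 144  # toy question: what is the square root of N?
WRONG = [x for x in range(21) if x != 12]  # plausible-looking wrong answers


def fast_guess(rng: random.Random) -> int:
    """System 1: an instant answer that is right exactly 30% of the time."""
    return 12 if rng.random() < 0.3 else rng.choice(WRONG)


def check(x: int) -> bool:
    """Verifying an answer (squaring it) is cheaper than producing one."""
    return x * x == N


def think(propose: Callable[[], int], budget: int) -> Tuple[Optional[int], int]:
    """System 2: propose, check, retry. Returns (answer or None, attempts spent)."""
    for attempt in range(1, budget + 1):
        candidate = propose()
        if check(candidate):
            return candidate, attempt
    return None, budget  # out of time: admit it instead of guessing


rng = random.Random(0)
trials = 1000
fast_correct = sum(check(fast_guess(rng)) for _ in range(trials))
slow_results = [think(lambda: fast_guess(rng), budget=10) for _ in range(trials)]
slow_correct = sum(answer is not None for answer, _ in slow_results)
mean_latency = sum(attempts for _, attempts in slow_results) / trials

print(f"System 1 accuracy: {fast_correct / trials:.2f}")
print(f"System 2 accuracy: {slow_correct / trials:.2f}, mean attempts: {mean_latency:.1f}")
```

In this sketch the extra attempts buy accuracy only because the check is cheap and reliable; with a weak checker, more loops would mostly buy more latency.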

IV. The Application

- From Chatbots to Agents: A Chatbot answers. An Agent loops. An Agent is simply a script that enforces the Law of Re-Entry: “Try to solve X. Look at the result. Did it work? If no, try Y.”

- Inference-Time Compute: We must build architectures where the model can “think” silently. We give it a “scratchpad” (a hidden part of its context window) where it can write, edit, and delete thoughts before showing us the final result.

- The Human as Editor: Our job shifts from “Writer” to “Editor.” The AI generates the variations (Re-Entry); we decide which of them survive.

But a machine that follows these laws is still just a machine. It lacks a Soul. It lacks a Purpose. Luhmann would ask: “What is the function of this system in the broader society?”